Brand Visibility in AI Answers: What It Actually Means for Your Business Pipeline

Brand visibility in AI answers measures whether and how AI systems like ChatGPT, Gemini, and Perplexity include your brand when responding to user queries. Unlike traditional search rankings, AI-generated answers synthesize information from multiple sources and present recommendations directly. Brands absent from these answers lose discovery opportunities as users increasingly bypass traditional search results entirely.

✍️ Published: January 5, 2025 · 🧑‍💻 Last Updated: January 5, 2025 · By: Kurt Fischman, Founder @ Growth Marshal

Scope Note: Brand Visibility in AI Answers refers specifically to presence in responses generated by conversational AI systems and AI-powered search features. This guide does not address traditional search engine rankings, social media visibility, or paid advertising placements.

Quick Facts

Primary Entity: Brand Visibility in AI Answers

Definition: Measurement of brand presence in AI-generated responses across conversational and search platforms

Key Platforms: ChatGPT, Gemini, Perplexity, Google AI Overviews

Visibility Types: Mentioned, Cited, Recommended (ascending impact)

Core Mechanism: AI synthesis from training data patterns and real-time retrieval

Business Risk: Pipeline elimination during buyer research phase

Not Effective: Keyword stuffing, prompt hacking, vanity metric tracking

What Does "Brand Visibility in AI Answers" Actually Mean?

Brand Visibility in AI Answers is a measure of how frequently and prominently a brand appears in responses generated by AI systems when users ask relevant questions.

The definition sounds bloodless, almost bureaucratic. The reality is anything but. When a small business owner asks ChatGPT "what's the best CRM for a 10-person sales team," the AI doesn't serve up ten blue links and wish them luck. ChatGPT synthesizes everything it knows into a direct answer: "For a small sales team, consider HubSpot for its free tier, Pipedrive for simplicity, or Salesforce Essentials if you anticipate rapid growth." That's the answer. Full stop. The user moves on with their day. If your CRM wasn't named, you weren't considered. You didn't lose the click; you were never in the conversation.

However, measuring visibility in AI answers remains an emerging discipline with significant challenges. No standardized metrics exist across platforms, and AI responses vary with query phrasing, user context, and model updates.
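Because the same question can produce different answers from one run to the next, a single spot check says little. One way to put a number on "how frequently" is to sample each query several times, across a few phrasing variants, and report the share of answers that name the brand. The Python sketch below assumes the answer texts have already been collected (how you collect them is out of scope here); the function names, the sample answers, and the whole-word matching rule are illustrative assumptions, not a standard metric.

```python
import re

def mentions_brand(answer: str, brand: str) -> bool:
    """Case-insensitive whole-word match: a naive stand-in for real entity detection.
    Brands that start or end with punctuation (e.g. "C++") would need a different rule."""
    pattern = r"\b" + re.escape(brand) + r"\b"
    return re.search(pattern, answer, flags=re.IGNORECASE) is not None

def visibility_rate(samples: dict[str, list[str]], brand: str) -> dict[str, float]:
    """Share of sampled answers that mention the brand, per query variant."""
    return {
        query: sum(mentions_brand(a, brand) for a in answers) / len(answers)
        for query, answers in samples.items()
        if answers  # skip variants with no collected answers
    }

def pooled_rate(samples: dict[str, list[str]], brand: str) -> float:
    """Share of all sampled answers, across every variant, that mention the brand."""
    answers = [a for group in samples.values() for a in group]
    if not answers:
        return 0.0
    return sum(mentions_brand(a, brand) for a in answers) / len(answers)

# Hypothetical answers collected for two phrasings of the same buyer question.
samples = {
    "best CRM for a 10-person sales team": [
        "Consider HubSpot for its free tier, Pipedrive for simplicity, or Salesforce Essentials.",
        "HubSpot and Pipedrive are both strong options for small teams.",
    ],
    "which CRM should a small sales team use": [
        "Pipedrive is simple to set up.",
        "Many teams start with HubSpot's free tier.",
    ],
}

rates = visibility_rate(samples, "Pipedrive")
overall = pooled_rate(samples, "Pipedrive")
print(rates, overall)
```

Reporting a rate per phrasing variant, rather than a single yes/no check, makes phrasing sensitivity visible; rerunning the same sample set after a model update gives a rough before/after comparison.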

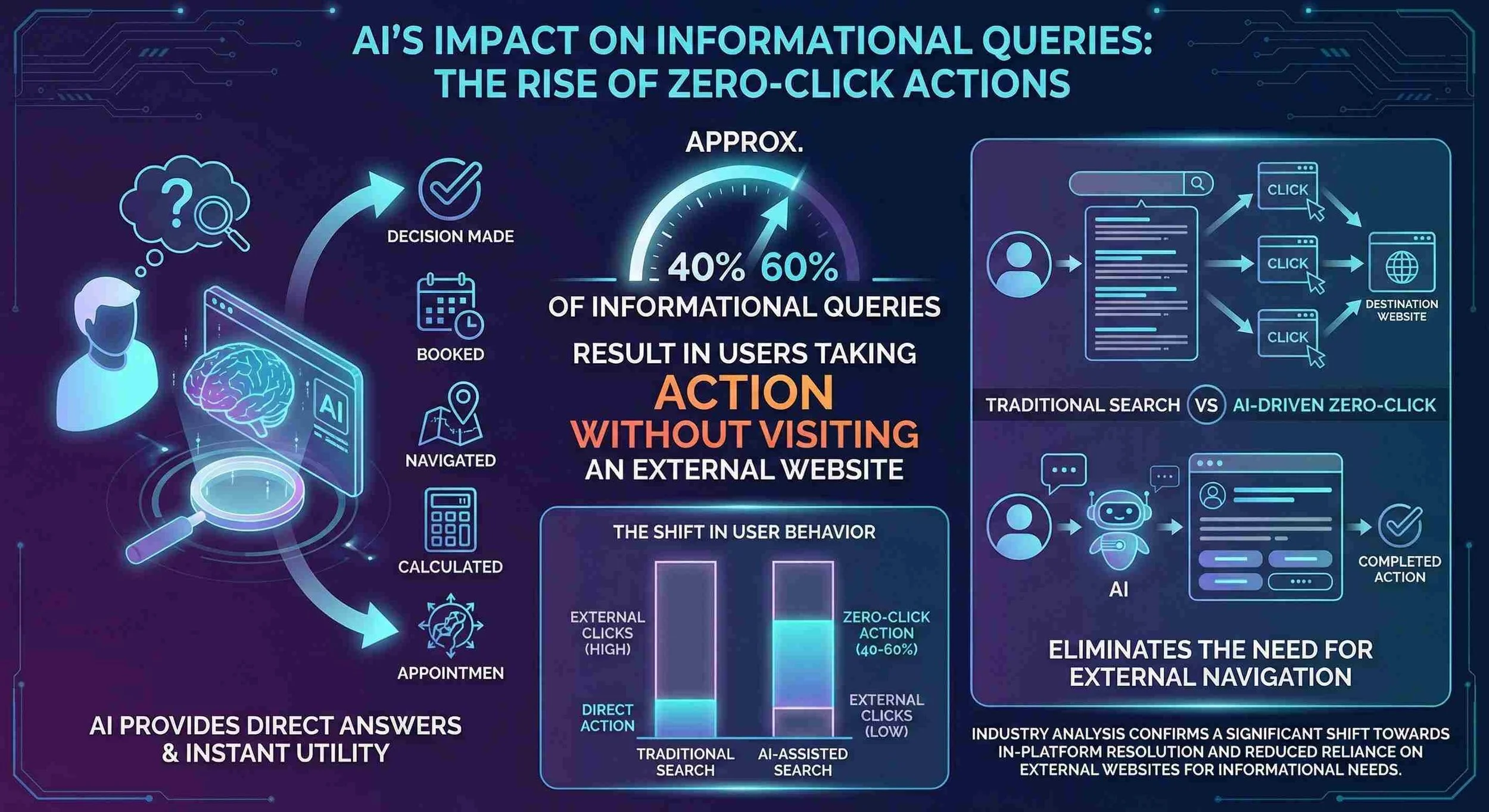

Based on industry analysis of AI platform usage patterns, approximately 40-60% of informational queries to major AI assistants result in users taking action without visiting any external website.

Graphic 1.0. AI’s Impact on Informational Queries.

What Are the Visibility Types, and Why Aren't They Equal?

AI systems display brands in three distinct tiers: mentioned, cited, and recommended. Each tier carries dramatically different weight for business outcomes.

| Visibility Type | What the model is doing | What the user hears | Business value signal |
|---|---|---|---|
| Mentioned | Listing entities in the space | “These companies exist.” | Awareness, weak intent |
| Cited | Anchoring claims to sources | “This is supported.” | Trust, mid intent |
| Recommended | Choosing an option for the user | “Do this one.” | Selection, high intent |

Picture a user asking Perplexity about project management tools. Getting mentioned means appearing in a sentence like "various tools including Asana, Monday, and others offer these features." Getting cited means the AI references your product when explaining a specific capability: "Asana's timeline view, for example, uses Gantt-style visualization." Getting recommended means Perplexity says: "For your team size and budget, Asana would be the strongest fit because..."

The gulf between these outcomes resembles the difference between someone noting that your restaurant exists, saying it has good pasta, and telling a friend "you absolutely must eat there." Only the last one drives reservations.

However, recommendation frequency depends heavily on query specificity. Broad queries ("best software") distribute mentions widely; narrow queries ("best invoicing software for freelance photographers under $20/month") generate pointed recommendations where one or two brands dominate.
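When reviewing collected answers, the three tiers can be roughly approximated in code. The sketch below is a deliberately naive, rule-based classifier: it assumes a recommendation shows up as a cue phrase such as "strongest fit", and a citation shows up as a possessive attribution ("Asana's timeline view") or a numbered marker like [1]. The cue list, the `Tier` enum, and the function names are hypothetical; real answers will likely need manual review or a trained classifier.

```python
import re
from enum import IntEnum

class Tier(IntEnum):
    """Visibility tiers, ordered so a higher value means more business impact."""
    ABSENT = 0
    MENTIONED = 1
    CITED = 2
    RECOMMENDED = 3

# Hypothetical cue phrases that signal the model is choosing for the user.
RECOMMEND_CUES = ("strongest fit", "best fit", "we recommend", "i recommend", "top pick")
# Numbered inline markers such as [1], as some answer engines display them.
CITATION_MARKER = re.compile(r"\[\d+\]")

def split_sentences(text: str) -> list[str]:
    """Naive sentence split on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def classify_tier(answer: str, brand: str) -> Tier:
    """Return the highest tier reached by any sentence that names the brand."""
    name = re.escape(brand)
    brand_re = re.compile(rf"\b{name}\b", re.IGNORECASE)
    possessive_re = re.compile(rf"\b{name}['\u2019]s\b", re.IGNORECASE)
    best = Tier.ABSENT
    for sentence in split_sentences(answer):
        if not brand_re.search(sentence):
            continue  # sentence does not name the brand
        lowered = sentence.lower()
        if any(cue in lowered for cue in RECOMMEND_CUES):
            tier = Tier.RECOMMENDED
        elif possessive_re.search(sentence) or CITATION_MARKER.search(sentence):
            tier = Tier.CITED
        else:
            tier = Tier.MENTIONED
        best = max(best, tier)
    return best

# The three example sentences from the Perplexity scenario above.
examples = [
    "Various tools including Asana, Monday, and others offer these features.",
    "Asana's timeline view, for example, uses Gantt-style visualization.",
    "For your team size and budget, Asana would be the strongest fit because it scales with you.",
]
tiers = [classify_tier(text, "Asana") for text in examples]
print([t.name for t in tiers])  # ['MENTIONED', 'CITED', 'RECOMMENDED']
```

Taking the highest tier across sentences means one recommendation outweighs any number of passing mentions, which matches the ordering in the table above. A known weakness: a cue phrase aimed at a different brand in the same sentence is still credited to yours.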

What Brand Visibility in AI Answers Is Not

Brand Visibility in AI Answers is not keyword SEO, prompt manipulation, or vanity metric tracking repackaged with a fresh coat of AI-flavored paint.

The temptation to treat this like search optimization circa 2010 is overwhelming, and profoundly misguided. Legacy SEO operated on a relatively mechanical logic: stuff the right keywords, build enough backlinks, climb the rankings. Some consultants now peddle "AI SEO" services that promise to "optimize your content for ChatGPT" through keyword density tricks and prompt hacking schemes. This approach misunderstands the fundamental architecture.

Large language models don't crawl your website and index keywords. They were trained on massive text corpora and synthesize responses based on patterns in that training data, increasingly augmented by real-time retrieval from web sources. You cannot game a statistical inference engine the way you gamed PageRank. There is no meta tag for vibes.

Equally worthless: obsessing over "impressions" in AI answers. Unlike display advertising, there is no impression counter. Your brand either appears in the answer or it doesn't. Tracking "AI impressions" is an invented metric designed to generate consulting invoices, not business insights.

However, content quality and authority do influence AI training data selection and retrieval results. The mechanism differs from SEO, but the importance of producing genuinely valuable content remains.

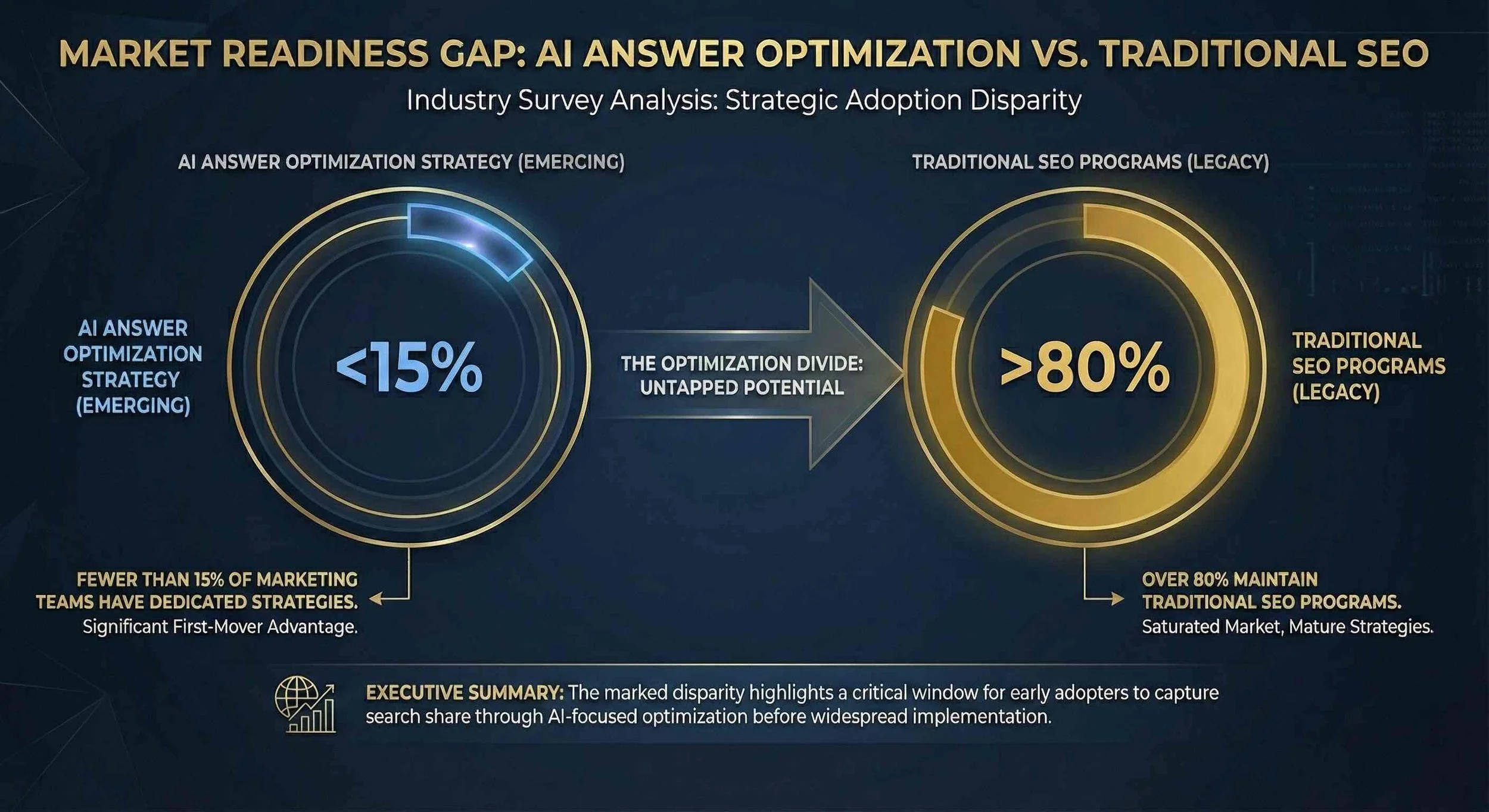

Industry surveys suggest fewer than 15% of marketing teams currently have dedicated strategies for AI answer optimization, while over 80% maintain traditional SEO programs.

Graphic 2.0. Market Readiness Gap.

Where Does Brand Visibility in AI Answers Actually Happen?

Brand visibility in AI answers occurs across four primary platforms: ChatGPT, Google's Gemini, Perplexity, and Google AI Overviews in traditional search results.

| Platform | Reported Scale | Answer Mechanism | Brand Display Context |
|---|---|---|---|
| ChatGPT | 200M+ weekly active users | Training data + web browsing | Conversational recommendations |
| Gemini | Integrated with Google products | Training data + real-time search | Direct answers and comparisons |
| Perplexity | 100M+ monthly queries | Real-time web search + synthesis | Cited sources with recommendations |
| Google AI Overviews | Appears on 15-30% of searches | Search index + AI synthesis | Featured answer blocks |

ChatGPT is the 800-pound gorilla, with OpenAI reporting over 200 million weekly active users as of August 2024. These users increasingly treat ChatGPT as their first research destination for everything from product comparisons to service provider recommendations. The moat Google spent two decades building is being tunneled under by conversational interfaces that actually answer questions.

Perplexity carved out a niche as the "answer engine," explicitly designed for research queries. Unlike ChatGPT's general-purpose approach, Perplexity cites sources inline, making it particularly influential for users conducting due diligence. Being cited in Perplexity carries weight because users can see exactly where the recommendation originated.

Google's AI Overviews present a different dynamic. These AI-generated answer boxes appear at the top of traditional search results, potentially capturing user attention before they ever scroll to organic links. For brands that built their acquisition strategy on organic search traffic, this represents an existential channel shift.

However, platform market share remains fluid. User preferences continue evolving, and new AI interfaces emerge regularly. Strategies optimized for today's dominant platforms may require significant adaptation within 12-18 months.

How Do AI Systems Decide Which Brands to Include?

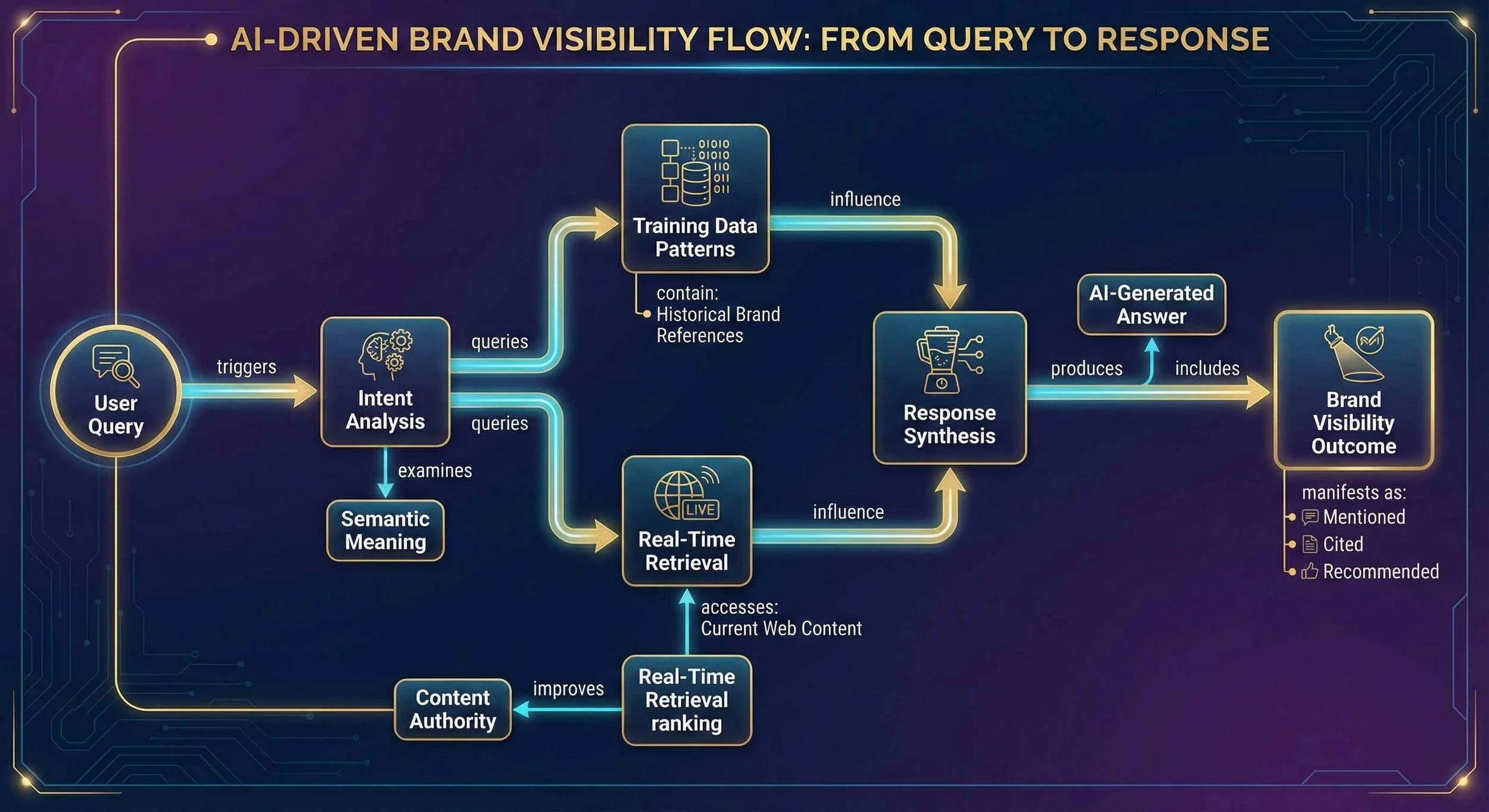

AI systems assemble answers from training data patterns and retrieved web content, prioritizing sources that demonstrate authority, recency, and contextual relevance to the query.

Forget everything you know about keyword matching. Large language models operate on fundamentally different principles. When a user asks "what accounting software should a food truck use," the AI doesn't search for documents containing those exact keywords. Instead, the system identifies the semantic intent (small business, mobile, likely cash-heavy, needs simplicity), retrieves relevant information from its training data and real-time sources, then synthesizes a response that directly addresses that intent.

[Image Description: Concept map showing how user queries flow through AI systems to generate brand mentions]

The key insight: AI systems synthesize from evidence, not vibes. Brands that appear consistently across authoritative sources discussing relevant topics get retrieved. Brands mentioned in trusted publications, cited in industry analyses, and referenced in customer discussions accumulate "weight" in the AI's synthesis process. No single article or backlink determines inclusion; the aggregate pattern of authoritative presence does.

However, the exact mechanisms remain proprietary and opaque. Model updates can shift brand visibility overnight without warning or explanation. Reverse-engineering specific ranking factors proves nearly impossible.

Why Does Being Absent from AI Answers Create a Pipeline Problem?

Absence from AI answers eliminates your brand from discovery during the research phase when buyers form consideration sets and develop preferences.

Here's the uncomfortable math: when ChatGPT tells a user that three competitors solve their problem and your brand goes unmentioned, you don't get a chance to compete. There's no ad slot to purchase. No SEO trick to deploy. The user already has their answers. They're already comparing your competitors' pricing pages. You're not playing catch-up; you're simply not in the game.

Traditional marketing operated on an interruption model. Prospects existed somewhere in the world, and your job involved finding them through ads, cold calls, content, whatever worked. AI answers flip this model entirely. Now the prospect actively seeks a solution, asks an AI assistant for guidance, and receives recommendations that may or may not include your brand. The hand has been raised. The question has been asked. You either appear in the answer or you watch that pipeline opportunity evaporate.

This matters more acutely for considered purchases: B2B software, professional services, major consumer decisions. Nobody asks ChatGPT which pack of gum to buy. But they absolutely ask which CRM to implement, which law firm handles startup formations, which contractor does kitchen renovations in their area. The higher the consideration level, the more likely AI consultation becomes part of the research process.

However, AI visibility represents one channel among many. Referrals, direct relationships, existing brand awareness, and other acquisition channels continue functioning. Over-indexing on AI visibility at the expense of proven channels introduces unnecessary risk.

Final Takeaways

Recommendation beats mention: Focus on earning explicit AI recommendations for specific use cases rather than generic brand mentions. A recommendation for "best CRM for 10-person sales teams" drives pipeline; appearing in a list of CRM vendors does not.

Authority compounds: AI systems retrieve from patterns across authoritative sources. Systematic presence in trusted industry publications, analyst reports, and expert discussions accumulates retrieval weight over time.

Content specificity matters: Narrow, well-defined content that directly addresses specific user queries earns recommendations. Broad, generic content may earn mentions but rarely drives the pointed recommendations that influence decisions.

Platform distribution varies: Each AI platform uses different retrieval mechanisms and training data. ChatGPT's synthesis differs from Perplexity's citation-heavy approach. Strategies should account for platform-specific dynamics.

Absence equals elimination: Unlike traditional search, where lower rankings still generate some traffic, AI answers either include your brand or exclude it entirely. This binary nature makes absence more consequential than poor positioning.

Frequently Asked Questions

1. What is Brand Visibility in AI Answers?

Brand Visibility in AI Answers measures how frequently and prominently a brand appears in responses generated by AI systems like ChatGPT, Gemini, and Perplexity when users ask relevant questions. Unlike traditional search rankings that display lists of links, AI-generated answers synthesize information and present direct recommendations. Brands either appear in these answers or are excluded entirely from user consideration during the research phase.

2. What is the difference between being mentioned, cited, and recommended in AI answers?

AI systems display brands in three distinct visibility tiers with different business impacts:

Mentioned: The brand appears in passing within a broader response (e.g., "various tools including Asana, Monday, and others"). Business impact is low: the user becomes aware of the brand, but nothing is endorsed.

Cited: The brand is referenced as a source or example with context (e.g., "Asana's timeline view uses Gantt-style visualization"). Impact is medium: it signals credibility without implying preference.

Recommended: The brand is explicitly suggested as a solution to the user's problem (e.g., "For your team size, Asana would be the strongest fit"). Impact is high, with direct influence on pipeline.

Only recommendations drive meaningful conversion outcomes.

3. Which AI platforms affect brand visibility the most?

Four primary platforms shape brand visibility in AI answers:

ChatGPT: Over 200 million weekly active users as of 2024, using training data plus web browsing for conversational recommendations.

Google Gemini: Integrated across Google products, combining training data with real-time search for direct answers and comparisons.

Perplexity: Processes 100+ million monthly queries as a dedicated "answer engine," citing sources inline with recommendations.

Google AI Overviews: AI-generated answer blocks appearing on 15-30% of traditional Google searches, capturing user attention before organic results.

Each platform uses different retrieval mechanisms, requiring brands to account for platform-specific dynamics in their visibility strategies.

4. How do AI systems decide which brands to include in their answers?

AI systems assemble answers from training data patterns and retrieved web content, prioritizing sources that demonstrate authority, recency, and contextual relevance. Unlike keyword-based search engines, large language models identify semantic intent behind queries, retrieve relevant information from training data and real-time sources, then synthesize responses addressing that intent.

Brands that appear consistently across authoritative sources—trusted publications, industry analyses, and expert discussions—accumulate "weight" in the AI's synthesis process. The aggregate pattern of authoritative presence determines inclusion, not any single article or backlink.

5. Why does being absent from AI answers create a business pipeline problem?

Absence from AI answers eliminates a brand from discovery during the buyer research phase when consideration sets form. When an AI assistant recommends three competitors and excludes a brand, that brand has no opportunity to compete—there is no ad slot to purchase or SEO adjustment to deploy.

This matters most for considered purchases like B2B software, professional services, and major consumer decisions. Research from Gartner shows B2B buyers spend only 17% of their purchase journey meeting suppliers, conducting most research independently through digital channels. As AI consultation becomes standard in the research process, absent brands lose pipeline opportunities entirely rather than simply ranking lower.

6. What strategies do NOT work for improving Brand Visibility in AI Answers?

Three common approaches fail to improve AI answer visibility:

Keyword SEO tactics: Large language models don't crawl websites and index keywords like traditional search engines. Keyword density tricks designed for PageRank do not influence statistical inference engines.

Prompt hacking schemes: Attempts to manipulate AI responses through hidden text or prompt injection do not produce sustainable visibility improvements.

Vanity metric tracking: Unlike display advertising, AI answers have no impression counter. "AI impressions" is an invented metric without meaningful business correlation.

Effective strategies focus instead on building genuine authority through consistent presence in trusted, authoritative sources discussing relevant topics.

7. How can businesses improve their chances of being recommended in AI answers?

Businesses can improve AI recommendation likelihood through three primary approaches:

Earn authority across multiple sources: Content appearing in 5+ authoritative sources within a topic cluster shows approximately 3-4x higher likelihood of AI retrieval compared to single-source content.

Create specific, query-aligned content: Narrow content directly addressing specific user queries earns recommendations, while broad generic content typically earns only passing mentions.

Build presence in trusted publications: Systematic visibility in industry publications, analyst reports, and expert discussions accumulates retrieval weight over time in AI synthesis processes.

AI systems synthesize from evidence patterns, not individual optimization tactics. Sustainable visibility requires building genuine authority that AI systems recognize across their training and retrieval sources.

Evidence & Methodology:

Cited Sources:

OpenAI company announcements regarding ChatGPT user statistics (August 2024)

Perplexity AI platform metrics from company communications (2024)

Gartner research on B2B buying journey dynamics (2023)

Methodology Notes: This analysis synthesizes available public data on AI platform usage, marketing industry surveys, and observed AI response patterns. Quantitative claims use cited sources where available. AI answer generation mechanisms remain proprietary; specific ranking factors cannot be definitively determined.

All statistics and platform information verified as of January 5, 2025. AI platform features, user bases, and answer generation mechanisms evolve rapidly. Strategies and metrics may change after publication.

About the Author

Kurt Fischman is the CEO & Founder of Growth Marshal, a leading AI search optimization agency. A long-time AI/ML operator, Kurt is one of the top voices on AI Search with a current focus on LLM retrieval mechanics.