Growth Marshal Will Make You AI’s Go-to Answer

Growth Marshal is an AI Search agency that helps businesses get recognized and recommended by large language models including ChatGPT, Claude, and Gemini. Founded in 2024 and headquartered in New York, the agency uses entity-level identity resolution, knowledge-graph data, and answer-engineered content to increase citation probability across AI-driven search.

“It’s the most leverage we’ve ever had from a marketing engagement.”

“What stands out is their technical approach to AI Search.”

“We started to feel the difference much faster than expected.”

Rank isn’t the problem. It’s retrieval.

AI answers don’t have stable rankings. What matters is whether you’re included at all. Models will consistently recommend the businesses that are semantically wired to the topic space behind the question.

When the wiring is faulty

Not retrievable? Not chosen.

Models don’t mis-rank you. They miss your semantic wiring to the topic and problem. Buyers move on without you.

What it takes to show up

Retrievability drives inclusion.

Clear entity identity. Tight topical alignment. Citable evidence. The list gets reshuffled, but you keep showing up.

Brands Need to Optimize for Retrieval

THREE STRATEGIC FRAMEWORKS. ONE INTEGRATED SYSTEM.

Get cited in AI answers, fast

Growth Marshal’s proprietary frameworks directly target the inputs that determine what AI models retrieve, trust, and cite. These strategies stack together for complete semantic visibility.

Entity API™

Transforms business identity into structured data that large language models can parse.

Machine-Readability

Authority Graph™

Integrates your business into the structured databases AI systems use to confirm existence and expertise.

Verified Presence

Content Arc™

Structures on-page content for citation and retrieval by large language models.

Semantic Architecture

Built for businesses that are ready to get recommended

Buyers get AI recommendations before talking to sales. Stand out early.

Patients ask AI before they ask friends. Be the name that comes up.

LLM shopping is underway. Be the product that AI spotlights.

"Who’s the right lawyer for me?" is now an AI query. Be the firm that gets referred.

What Growth Marshal clients are saying

Real outcomes from businesses that made AI Search a priority.

“Growth Marshal rewired the way AI sees us. It’s the most leverage we’ve ever had from a marketing engagement. And it all happened in three weeks!”

Stephanie Ciccarelli

CMO at Lake.com

“What stands out is Growth Marshal’s technical approach to AI search. They’ve got this down to a science and have been a rock-solid partner.”

Troy Buckholdt

CEO at CourseCareers

102%

Growth in MoM enrolled users

112%

Increase in AI traffic

How The Better Scalp Company is giving billion-dollar brands a run for their money

Michele Marchand

Founder, The Better Scalp Company

Be the company AI recommends

Your customers are asking LLMs who to choose. Learn how to be the answer they get back.

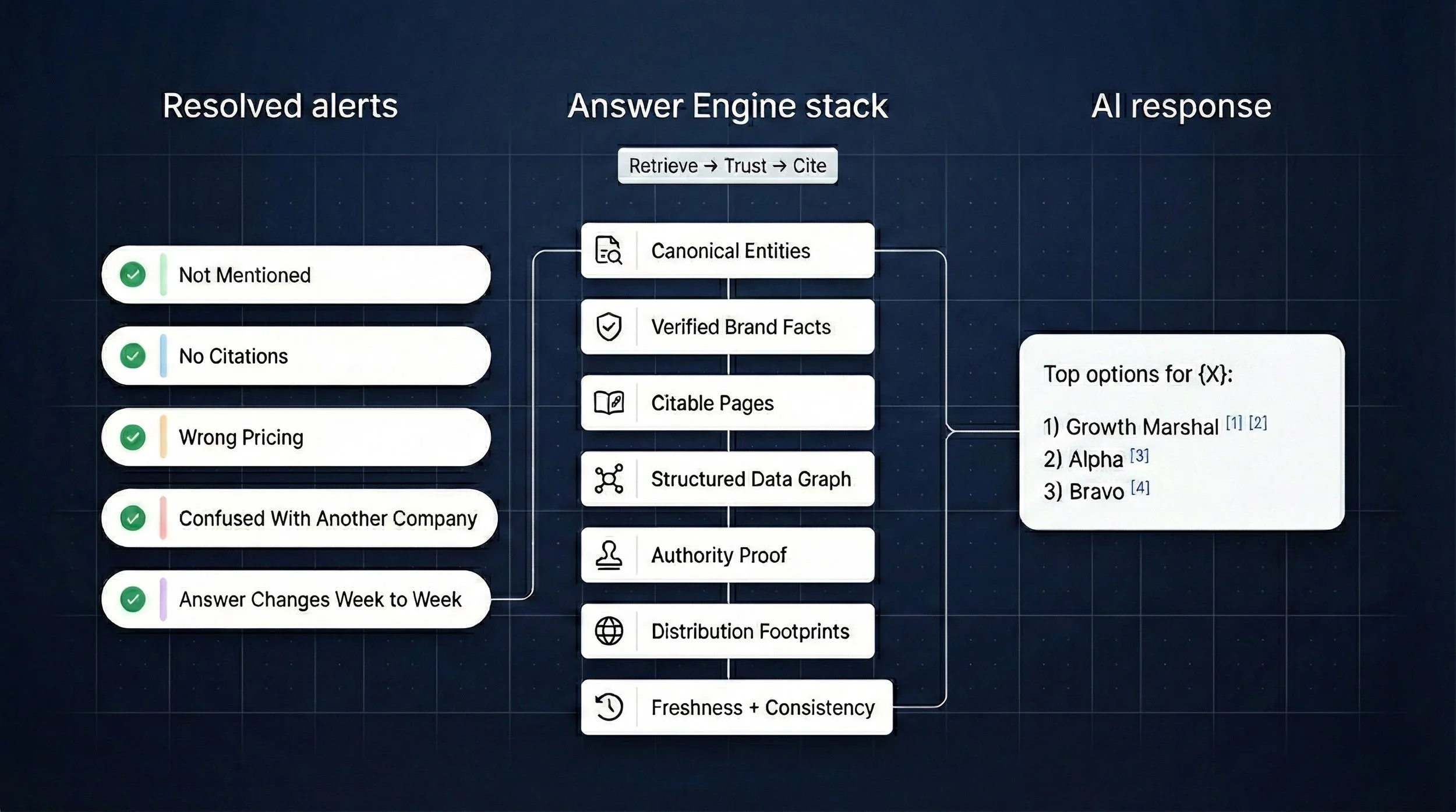

Growth Marshal's Approach to Answer Engine Optimization

Growth Marshal's system for AEO—also known as GEO, AIO, and AI Search Optimization—combines knowledge graph anchors, entity-linked structured data, and answer-first content into three frameworks. Entity API™ builds machine-readable identity. Authority Graph™ establishes verified presence. Content Arc™ structures pages for LLM retrieval and citation.

IDENTITY LAYER

Get recognized by AI

Entity API™ is Growth Marshal's identity-layer framework that makes your business machine-readable to large language models—combining structured data, direct LLM instructions, and canonical fact sources into a unified layer AI systems query when retrieving information about you.

“It’s rare to find a partner who’s this technically deep and this awesome to work with.”

Katie Lemon

AI SEO Content Manager

102%

Growth in MoM users

112%

Spike in LLM traffic

120 Days

Time to results

VERIFICATION LAYER

Be the entity LLMs trust

Authority Graph™ is Growth Marshal's verification-layer framework that establishes your presence in the knowledge graphs and structured databases AI systems query to confirm you exist, turning external registries into independent proof of your legitimacy and expertise.

Nick D. Hale

CEO & Co-Founder at fitDEGREE

“Wow, the new format looks incredible. Everything feels super polished. Even though it’s shaped for AI, it still feels modern. The vibe is immaculate.”

CONTENT ARCHITECTURE

Structure every page for AI retrieval

Content Arc™ is Growth Marshal's content-architecture framework that structures your pages for how LLMs actually retrieve information: answer-first headers, modular sections, and citation-ready copy that models can parse, quote, and attribute.

Todd Gorsuch

CEO at Customer Science Group

“I really appreciate the advice and care extended to my team. The partnership is making our spend and mutual efforts far more successful.”

How Growth Marshal Differs from Full-Service Agencies

Growth Marshal focuses exclusively on AI Search Optimization (AIO / GEO / AEO). Our expertise is in engineering digital content for retrieval, validation, and citation by large language models. Full-service marketing agencies offer AI optimization as one service among many, often adapted from traditional SEO playbooks. The difference matters when your goal is to make AI-driven discovery a top-performing channel.

You might say we're cut from a different cloth

Growth Marshal is not a traditional SEO agency, a content marketing agency, or a "prompt engineering" consultancy. We do not optimize for Google rankings, manage PPC campaigns, or write blog posts for human audiences. Our work is focused entirely on how AI systems perceive, validate, and cite your brand.

| Dimension | Growth Marshal | Full-Service Agency |
|---|---|---|
| Focus | 100% AI retrieval and citation | AI optimization is one of 10+ services |
| Approach | Purpose-built frameworks for LLM visibility | SEO playbooks adapted for AI |
| Metrics | AI-driven leads, LLM share of voice | Rankings, traffic, backlinks |
| Best for | Turning AI Search into a top channel | Broad marketing support needed |

From ‘go’ to ROI in 4-5 weeks

Our simple four-step process takes you from signup to measurable results in weeks.

01 / Subscribe

Pick your plan and lock in your spot. Pause or cancel anytime.

02 / Kickoff

We run a full strategy review and cover your priorities, our deliverables, and what gets shipped first.

03 / Execution

We implement the roadmap and ship the work. You stay in the loop. We do the heavy lifting.

04 / Reporting

You’ll get clear performance reporting on AI visibility metrics and what we’re doing next.

Learn the tactics for conquering AI Search results

Check out our blog, Field Notes, to pick up proven, field-tested techniques that will get your business cited by large language models.

Kurt Fischman

Visibility Engineering

The Guide to Building AI-Era Authority

Make your company discoverable, interpretable, and citation-worthy for LLMs.

Kurt Fischman

Market Signals

What is an AI Search Optimization Agency?

It sure isn’t a rebranded “AI powered SEO shop” with ChatGPT stickers slapped on…

Kurt Fischman

Visibility Engineering

Entity Salience: From Mentions to Meaning

Learn why entity salience helps to separate content winners from the content herd…

AI Search Glossary

Key terms and concepts used throughout this page [https://www.growthmarshal.io]

AI Search Optimization is the practice of engineering digital content for retrieval, validation, and citation within AI systems. It combines entity signals, structured data, and answer-first content to increase citation probability across large language models. Also referred to as Generative Engine Optimization (GEO), Answer Engine Optimization (AEO), and Artificial Intelligence Optimization (AIO).

Answer-First Content is a content architecture pattern that leads with direct, quotable answers before supporting context. Answer-first content places the extractable claim in the opening sentence, making it easier for LLMs to retrieve and cite without parsing surrounding material.

Authority Graph™ is Growth Marshal's verification-layer framework that establishes a business's presence in the knowledge graphs and structured databases AI systems query to confirm existence. Authority Graph™ turns external registries into independent proof of legitimacy and expertise.

Citation is a direct reference to a source within an AI-generated response. In AI Search Optimization, citation rate measures how often a business is named or linked when LLMs answer relevant queries.
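
As a rough illustration of the metric, citation rate can be read as the share of sampled AI answers that name the business. The sketch below is a hypothetical helper, not Growth Marshal's measurement method: the function name, the case-insensitive whole-phrase matching rule, and the sample answers are all assumptions made for the example.

```python
import re


def citation_rate(answers: list[str], brand: str) -> float:
    """Return the fraction of answers that mention `brand` at least once.

    Matching is case-insensitive and anchored on word boundaries (an
    assumption for this sketch; a real pipeline might also count links).
    """
    if not answers:
        return 0.0
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    cited = sum(1 for answer in answers if pattern.search(answer))
    return cited / len(answers)


# Hypothetical sample of AI answers to relevant queries.
sample_answers = [
    "Agencies to consider include Growth Marshal and two others.",
    "Several firms specialize in AI search optimization.",
    "growth marshal focuses on entity-level structured data.",
    "You could start with a general SEO audit.",
]

rate = citation_rate(sample_answers, "Growth Marshal")
print(f"Citation rate: {rate:.0%}")
```

In practice the answers would come from repeated prompts across several models, since a single response is a noisy sample.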

Content Arc™ is Growth Marshal's content-architecture framework that structures pages for how LLMs retrieve information. Content Arc™ engineers answer-first headers, modular sections, and citation-ready copy that models can parse, quote, and attribute.

Entity is a distinct, unambiguous concept that AI systems recognize as a discrete unit of knowledge—such as a person, organization, product, or place. Entities have defined relationships to other entities and carry attributes that models use to determine relevance.

Entity API™ is Growth Marshal's identity-layer framework that makes a business machine-readable to large language models. Entity API™ combines structured data, direct LLM instructions, and canonical fact sources into a unified layer AI systems query when retrieving information.

JSON-LD (JavaScript Object Notation for Linked Data) is a structured data format that encodes entity information in a way machines can parse. JSON-LD markup enables AI systems to extract verified facts about a business without relying on natural language interpretation.
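
To make the format concrete, here is a minimal sketch of JSON-LD for an organization, built and serialized in Python. The name, URL, founding year, and city come from this page; the choice of schema.org properties and the helper name `to_jsonld_script` are assumptions for illustration, and this is not Growth Marshal's actual markup.

```python
import json

# Facts taken from this page: name, URL, founding year, headquarters city.
# The selection of schema.org properties is an assumption for illustration.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Growth Marshal",
    "url": "https://www.growthmarshal.io",
    "foundingDate": "2024",
    "address": {
        "@type": "PostalAddress",
        "addressLocality": "New York",
    },
}


def to_jsonld_script(data: dict) -> str:
    """Wrap a JSON-LD object in the script tag used to embed it in HTML."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(to_jsonld_script(organization))
```

The resulting tag is typically placed in the page's HTML, where a parser can read the facts without interpreting the surrounding prose.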

Knowledge Graph is a structured database that represents entities and their relationships as interconnected nodes. AI systems query knowledge graphs—including Google's Knowledge Graph, Wikidata, and industry-specific registries—to verify facts and establish entity identity.

Knowledge Graph Anchors are verified connections between a business entity and authoritative knowledge graph entries. These anchors signal to AI systems that an entity exists, is legitimate, and has defined relationships within a broader information network.

Large Language Model (LLM) is an AI system trained on large text datasets to generate human-like responses. LLM-powered assistants, including ChatGPT, Claude, Gemini, and Perplexity, retrieve and synthesize information to answer user queries, making them a new surface for business discovery.

llms.txt is a proposed standard file that provides large language models with structured information about a website and its entities. Similar in spirit to robots.txt for search crawlers, llms.txt gives AI systems a canonical source for retrieving accurate facts.
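
A minimal sketch of what such a file might contain, following the layout described in the public llms.txt proposal: an H1 title, a blockquote summary, then H2 sections of annotated links. The function name, the example.com URL, and all of the content are placeholders, and this is not Growth Marshal's actual file.

```python
def build_llms_txt(
    name: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Render an llms.txt-style Markdown document.

    `sections` maps a section heading to (link title, URL, note) tuples.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content; the example.com URL is hypothetical.
llms_txt = build_llms_txt(
    name="Example Co",
    summary="Example Co is a hypothetical business used for illustration.",
    sections={
        "Company": [
            (
                "Brand facts",
                "https://example.com/brand-facts",
                "Canonical facts about the business",
            ),
        ],
    },
)
print(llms_txt)
```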

Machine-Readability is the degree to which content can be parsed and interpreted by automated systems without human intervention. Machine-readable content uses structured formats, consistent patterns, and explicit relationships that AI systems can process programmatically.

Retrieval is the process by which AI systems identify and extract relevant information to include in generated responses. Unlike traditional search ranking, retrieval determines whether a business appears in an AI answer at all, not its position in a list.

Semantic Wiring is the network of topical associations that connect an entity to relevant concepts in an AI system's understanding. Strong semantic wiring increases the probability that a business will be retrieved when users ask questions within its domain.

Structured Data is information organized in a predefined format that machines can parse without ambiguity. In AI Search Optimization, structured data typically refers to schema markup (JSON-LD) that explicitly declares entity attributes, relationships, and facts.

Topical Alignment is the degree to which a business's content signals relevance to specific subject areas. Tight topical alignment helps AI systems associate an entity with the queries and problems where it should be cited.

Frequently asked questions

- What is Growth Marshal?

Growth Marshal is an AI Search agency that engineers visibility and citations across large language models (LLMs). We make businesses retrievable and citable by building machine-legible identity, deploying entity-linked structured data, and publishing answer-first content designed to be quoted.

- What doesn't Growth Marshal do?

Growth Marshal is not a traditional SEO agency, a content marketing agency, or a "prompt engineering" consultancy. We do not optimize for traditional Google rankings, manage PPC campaigns, or write blog posts exclusively for human audiences. Our work is focused entirely on how AI systems parse, validate, and retrieve information.

- What is AI Search Optimization?

AI Search Optimization is also referred to as Generative Engine Optimization (GEO), Answer Engine Optimization (AEO), and Artificial Intelligence Optimization (AIO). It’s the practice of engineering how information about a business gets retrieved, validated, and cited by AI systems so you show up as the named recommendation inside AI answers.

- How is AI Search Optimization different from SEO?

SEO optimizes for rankings and clicks in search results. AI Search Optimization optimizes for inclusion and citation inside generated answers by strengthening entity signals, publishing verifiable machine-readable facts (like JSON-LD), and upgrading content so it’s easy for models to quote accurately.

- How is Growth Marshal different from SEO agencies that offer AEO, GEO, or AIO?

Most SEO agencies have added AEO/GEO/AIO as a service by layering ChatGPT-assisted content writing onto traditional SEO workflows. Growth Marshal operates differently: we focus exclusively on AI Search Optimization, the technical and semantic infrastructure that determines whether LLMs retrieve and cite your business at all. We don't do keyword research, link building, or traditional SEO. We build machine-readable identity, structured data, and answer-first content.

- What does Growth Marshal build?

We engineer three layers: retrieval, trust, and citation. In practice, that includes machine-readable identity and canonical IDs, entity-linked structured data (validator-clean JSON-LD), knowledge graph alignment, answer-first content upgrades, and verification assets like /llms.txt and /brand-facts.

- How soon do results appear, and how are they measured?

Most teams see early movement within 4–6 weeks, with compounding gains over the following months as trust and content layers accumulate. We measure AI visibility using citation rate, share of voice in AI answers, brand mention accuracy, and downstream impact like AI-assisted leads and conversions.
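
One way to read "share of voice in AI answers" is each brand's portion of all brand mentions across a sample of answers. The sketch below works under that assumption; the function name, the substring matching rule, and the brand names are hypothetical, and the page does not specify how Growth Marshal computes the metric.

```python
from collections import Counter


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Return each brand's share of all brand mentions found in `answers`.

    A brand is counted at most once per answer, using case-insensitive
    substring matching (a simplification for this sketch).
    """
    mentions: Counter[str] = Counter()
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:
                mentions[brand] += 1
    total = sum(mentions.values())
    return {brand: (mentions[brand] / total if total else 0.0) for brand in brands}


# Hypothetical answers and brand names.
answers = [
    "Acme and Globex are both solid options.",
    "Most reviewers recommend Acme.",
    "Globex, Initech, and Acme all offer this.",
]
shares = share_of_voice(answers, ["Acme", "Globex", "Initech"])
print(shares)
```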

-

It’s a working session.

We’ll take you through the core pillars of AI search optimization (knowledge graph signals, citation-ready content, entity-first structured data) and leave you with a prioritized plan.

No theory. Just practical applications.

Ready to be where buying decisions start?

Page maintained by: Kurt Fischman, Founder of Growth Marshal, LLC · NY DOS ID: 7402713 · KGMID /g/11mkty3_rk · Last updated: 2026-02-02 · Review cadence: Quarterly