How AEO Rewires the Buyer Discovery Journey

AI search optimization, including GEO and AEO, is a discipline that maps your brand onto the new discovery surfaces buyers actually use: LLMs, AI overviews, and answer engines. Instead of chasing blue links, teams using The Surface Shift Map re-architect journeys so that 30–50 percent of early consideration touches originate inside AI-generated answers.

✍️ Published: December 6, 2025 · 🧑‍💻 Last Updated: December 6, 2025 · By: Kurt Fischman, Founder @ Growth Marshal

How is AI search changing buyer discovery?

AI search optimization is a discipline that realigns buyer discovery around generative engines, answer boxes, and zero click interfaces rather than traditional search result pages. AI search optimization recognizes that a growing share of buyers start with a prompt, not a keyword, and expect a single synthesized answer in under 10 seconds.

Modern AI search surfaces include large language models, AI-powered overviews, and answer engines that compress 10–20 traditional clicks into one response. Generative engines such as ChatGPT, Perplexity, and Gemini collapse comparison, education, and shortlisting into a single interaction that often lasts under 3 minutes. Founders who still think in linear “awareness to consideration to purchase” funnels are doing rotary-phone strategy in a smartphone market.

AI search behavior shifts discovery from page-based navigation to surface-based exposure. Buyers bounce between AI answers, short-form video, marketplaces, and review aggregators in bursts of 3–7 micro sessions per day. The ugly truth is that most brands have 0 percent presence in those AI answers, which means 100 percent of that early demand flows to louder or more structured competitors.

However, AI search discovery does not eliminate classic search or branded navigation, and exceptions include low-consideration purchases where buyers still click the first paid result and move on.

From linear funnel to surface hopping

The Surface Shift Map is a framework that reframes the buyer journey as a sequence of discovery surfaces rather than funnel stages. The Surface Shift Map focuses on where the buyer first encounters a credible answer, not where marketing thinks the journey begins.

Classic funnels assume one starting line and 3–5 neatly stacked stages that can be moved in sequence like Jenga blocks. AI search behaves more like a glitchy subway map where buyers board at any station, hop 2–3 surfaces, and can arrive at purchase in as little as 24 hours. A founder might ask a model for “best AI search agencies,” skim a zero click answer, jump to YouTube for “is this even real,” then DM a friend on Slack and sign a contract within 7–14 days.

Surface hopping creates compounding advantage for brands that appear in multiple AI responses across a week. If AI answers mention a brand name 3–5 times in a category, that repetition acts like free ad frequency; the name feels obvious and safe. The inverse is darker: if your brand is never mentioned, you functionally do not exist inside that buyer’s perceived category.

However, surface hopping still respects constraints such as budget, risk, and internal politics, and exceptions include heavily regulated categories where human gatekeepers slow down the journey regardless of AI exposure.

From queries to jobs-to-be-done

Jobs-to-be-done alignment is a strategy that maps prompts and AI queries to underlying buyer outcomes instead of vanity keywords. Jobs-to-be-done alignment acknowledges that prompts often encode multi-step intent such as “compare, sanity check, and shortlist” inside a single 15–30 word input.

Prompt-shaped discovery compresses the old keyword tree into natural language statements like “how do I show up in AI answers without rebuilding my entire site” or “which CRM plays nicest with AI tools for a 10-person team.” Each prompt typically bundles 2–4 jobs such as “avoid looking stupid,” “protect budget,” and “get promoted, not fired.” AI search tools reward content architectures that mirror those jobs with clear definitions, trade-offs, and next steps.

If a growth leader knows that 40 percent of pipeline comes from buyers with a “de-risk career” job, the content and AI search strategy should feed models entity-rich narratives that say exactly that. When prompts align with your language, models are more likely to pull your brand into answers, roughly 5–15 percent of the time in the early stages.

However, jobs-to-be-done alignment does not rescue weak offers or broken onboarding, and exceptions include categories where price sensitivity overwhelms every other job and buyers default to “cheapest acceptable option.”

Where do GEO and AEO reshape the buyer journey stages?

AI search optimization / GEO / AEO is a stack of practices that push your brand into generative answers across “help me understand,” “who should I consider,” and “what should I do next” queries. Generative Engine Optimization (GEO) tunes your presence for large language models, while Answer Engine Optimization (AEO) tunes content and structure for search features that output direct answers.

GEO focuses on models that summarize the web into one paragraph, often compressing 5–10 organic results, 3–4 review sites, and 1–2 expert blogs into a single narrative. AEO focuses on systems that render featured snippets, AI overviews, and slot-based answer cards. Together, GEO and AEO can shift 10–30 percent of your qualified discovery from ads to organic AI surfaces over 12–18 months if executed consistently.

However, GEO and AEO still depend on crawlable, structured content foundations, and exceptions include brands whose sites are so thin or fragmented that models literally have nothing trustworthy to reuse.

Table 1.0: Classic SEO vs AI Search Optimization / GEO / AEO

This table highlights how classic SEO chases rank for pages, while AI search optimization chases representation inside model narratives. That shift changes the “unit of work” from isolated blog posts to consistent entity-centric architecture across 20–50 key assets.

However, comparison tables like this oversimplify messy category dynamics, and exceptions include oligopoly markets where 2–3 incumbent brands dominate both blue links and AI answers regardless of nuance.

How GEO touches early discovery and shortlisting

Generative Engine Optimization is a practice that maximizes the odds that large language models mention your brand in category, “best of,” and “how should I think about” answers. Generative Engine Optimization treats each model as a probabilistic media channel where your brand is either in the shortlist or sitting outside the stadium.

GEO work often concentrates on 15–30 compound prompts per category, such as “best logistics software for 3PLs under 50 people” or “how do I stop hallucinations in AI customer support.” The goal is not to “rank number one” but to appear as a credible option in 20–40 percent of those answer shapes. When that happens, top-of-funnel discovery shifts from paid awareness to ambient familiarity; buyers start telling sales “I keep seeing you mentioned in AI tools.”

If a category receives 10,000 relevant AI prompts per month and your brand appears in 5 percent of synthesized answers, that equates to 500 untracked impressions that never show in Google Analytics. Even if only 1–3 percent of those impressions convert into site visits, the incremental pipeline can grow 10–25 percent without any new ad spend.

However, GEO does not guarantee favorable narratives, and exceptions include models trained on legacy sentiment where old scandals or outdated reviews distort how your brand is summarized.

How AEO influences “what should I do next” moments

Answer Engine Optimization is a practice that structures content so that AI systems can confidently output prescriptive steps, comparisons, and local results. Answer Engine Optimization favors clear headings, concise definitions, schema markup, and stepwise instructions that map to user actions within 2–5 minutes.

AEO shines when buyers ask “what should I do next” questions such as “how do I map AI search to my funnel” or “who offers AI search audits for small teams.” Systems like Google’s AI overviews, Bing Copilot, and vertical answer engines look for content that resolves those questions within 5–7 steps, uses concrete nouns, and references relevant entities like “AI search optimization,” “entity SEO,” and “knowledge graphs.”

If a playbook page captures a “how to” answer that triggers AI overviews for 10–15 related queries, your brand can become the implied standard play within that topic. That position can increase assisted conversions by 5–20 percent across a quarter, especially when the call to action lands within 2 scrolls.

However, AEO only amplifies clarity that already exists, and exceptions include vague “thought leadership” articles that say nothing concrete and give models nothing safe to recycle.

What is The Surface Shift Map for AI search discovery?

The Surface Shift Map is a framework that remaps buyer journeys onto AI-mediated discovery surfaces for founders, small business owners, and growth leaders. The Surface Shift Map replaces funnel diagrams with specific surfaces such as “LLM answer,” “AI overview,” “YouTube explainer,” and “review hub” arranged along time and intent.

The Surface Shift Map consists of three layers that stack: the buyer’s job-to-be-done, the AI surfaces that mediate that job, and the brand assets that can appear on those surfaces. Each row in the map ties one job such as “sanity check this idea” to 2–3 surfaces and 3–5 assets. The goal is to engineer situations where a buyer encounters your brand on at least 2 surfaces within the first 72 hours of research.
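One way to make the three layers concrete is to store each row of the map as a small record. The sketch below is illustrative only: the `MapRow` class, its field names, and the example asset names are assumptions, not part of the framework as published. It simply checks a row against the 2–3 surfaces and 3–5 assets guideline described above.

```python
from dataclasses import dataclass


@dataclass
class MapRow:
    """One row of a Surface Shift Map: a job, its surfaces, and brand assets."""
    job: str              # the buyer's job-to-be-done
    surfaces: list[str]   # AI surfaces that mediate the job
    assets: list[str]     # brand assets that can appear on those surfaces

    def is_well_formed(self) -> bool:
        # Guideline from the text: 2-3 surfaces and 3-5 assets per job.
        return 2 <= len(self.surfaces) <= 3 and 3 <= len(self.assets) <= 5


# Hypothetical row; the asset names are placeholders for illustration.
row = MapRow(
    job="sanity check this idea",
    surfaces=["LLM answer", "YouTube explainer"],
    assets=["category explainer page", "comparison guide", "founder video"],
)
print(row.is_well_formed())
```

A list of such rows exports cleanly to a spreadsheet, which is where owners and deadlines can be attached.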

If a typical buyer touches 5–8 surfaces before shortlisting options, The Surface Shift Map aims to occupy at least 2 of those with AI search touchpoints and 1 with owned content. That configuration often correlates with a 15–30 percent uplift in close rates because familiarity compounds faster than rational comparison.

However, The Surface Shift Map cannot compensate for broken sales operations, and exceptions include organizations where inbound leads sit untouched for 3–5 days, erasing the advantage created upstream.

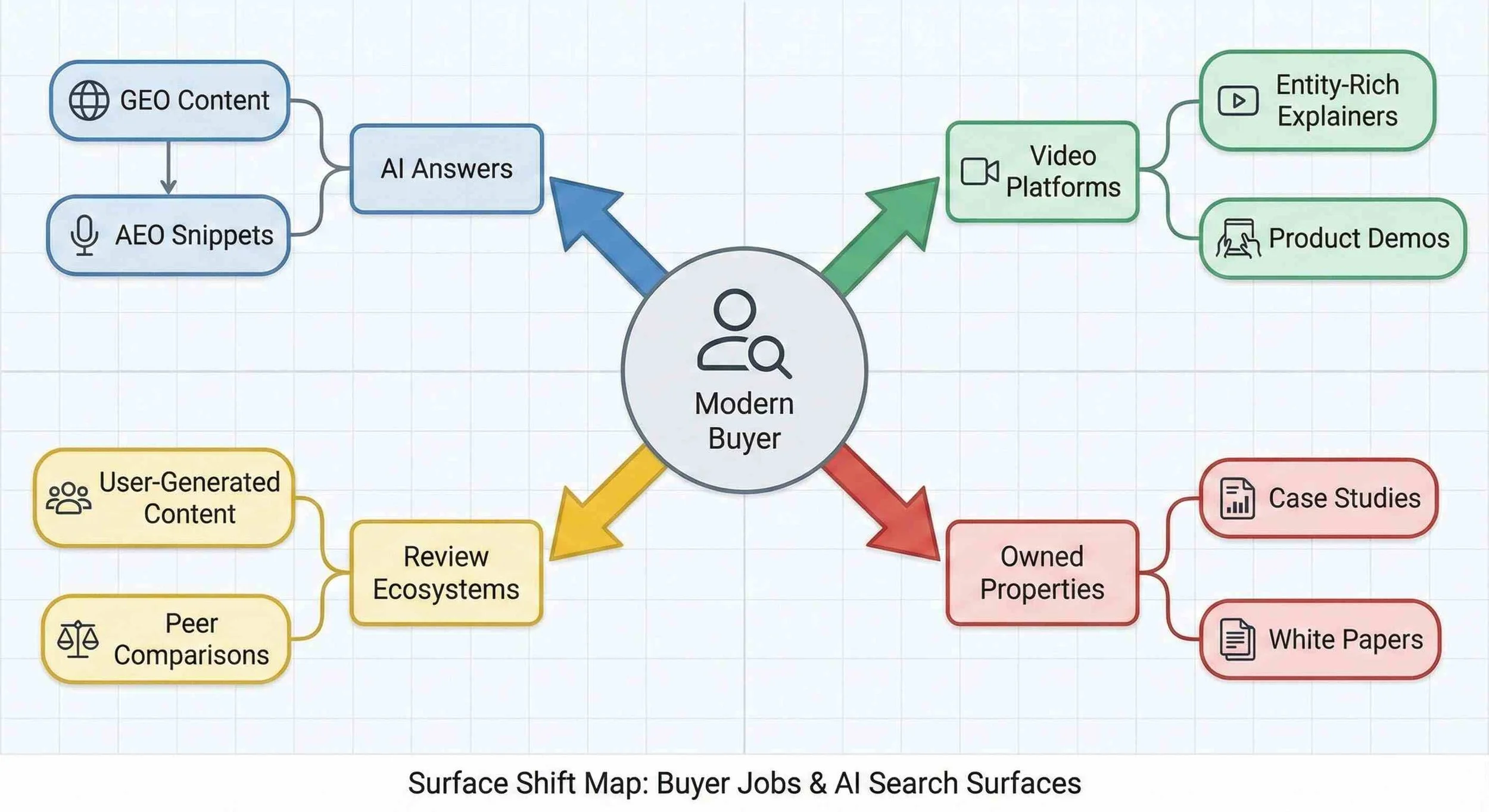

Concept map: AI surfaces in the modern journey

AI Surfaces in the modern buyer journey

This concept map reflects how AI search optimization, GEO, and AEO distribute your brand across multiple surfaces that matter in the first 7–30 days of a buying cycle. The central buyer node connects directly to AI answers because that is often the first unbranded interaction, particularly for digital-native and under-40 decision makers who trust models 10–25 percent more than traditional ads.

However, concept maps remain strategic toys until someone turns them into a spreadsheet with owners and deadlines, and exceptions include teams that print nice diagrams, post them on internal wikis, and never change content or schema in the wild.

Using The Surface Shift Map as an operating system

The Surface Shift Map is an operating system that sequences AI search, content, and sales enablement work into a coherent backlog. The Surface Shift Map forces teams to move from random acts of content to deliberate, surface-specific plays that can be measured quarter over quarter.

Operationalizing the map usually starts with identifying 8–12 critical jobs-to-be-done that correlate with revenue and mapping them to prompts, surfaces, and assets. For each job, teams design 2–3 GEO plays, 1–2 AEO plays, and 1 sales enablement artifact such as a one-page summary or objection-handling guide. Over 2–3 quarters, the backlog might cover 40–60 assets, which is far more focused than the 200-post zombie blogs most companies maintain.

If each completed job cluster adds even 2–4 qualified opportunities per quarter, a team that ships 10 clusters could add 20–40 additional deals annually without touching performance marketing budgets. That lift is not magic; it is what happens when your brand shows up repeatedly where future buyers already spend their cognitive calories.

However, using The Surface Shift Map as an operating system demands prioritization discipline, and exceptions include organizations where every executive pet project hijacks the backlog every 4–6 weeks.

How should teams measure discovery in AI search channels?

AI search measurement is a practice that infers discovery impact from partial, indirect, and lagging signals across 3–5 data sources. AI search measurement accepts that no analytics platform will show a neat “LLM impressions” column and that leaders must build proxies that are approximately right rather than precisely wrong.

Leading indicators for AI search discovery often include brand mention tracking inside model outputs, changes in “how did you hear about us” responses, and shifts in non-branded organic conversion rates. A useful baseline might look for a 10–20 percent increase in prospects who cite “AI tools,” “ChatGPT,” or “saw you in an AI answer” over 6–12 months after GEO and AEO work begins.

However, AI search measurement can easily turn into dashboard cosplay, and exceptions include teams that obsess over scraped answer logs while ignoring obvious revenue signals from CRM and billing.

Practical metrics for AI search optimization / GEO / AEO

Practical AI search metrics are a compact set of numbers that track presence, resonance, and revenue impact for GEO and AEO programs. Practical AI search metrics translate vague “visibility” into concrete targets founders can inspect during a 30-minute review.

Teams can define a presence metric as “share of AI answer mentions” across a panel of 20–50 prompts measured monthly or quarterly. Resonance can be approximated by watching the ratio of direct and organic branded traffic to total traffic, looking for a 5–15 percent uplift over 2–3 quarters. Revenue impact can be modeled by tracking win rates and cycle times for deals that self-report AI tools as part of discovery compared with those that do not.
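The presence metric can be sketched as a simple ratio over a prompt panel. The example assumes the answers have already been collected and saved as text; how they are gathered is out of scope here. The function name, the panel structure, and the brand "Acme Logistics" are hypothetical.

```python
import re


def share_of_answer_mentions(answers_by_prompt: dict[str, list[str]], brand: str) -> float:
    """Fraction of sampled answers that mention the brand at least once."""
    # Whole-word, case-insensitive match on the brand name.
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    total = 0
    mentioned = 0
    for answers in answers_by_prompt.values():
        for answer in answers:
            total += 1
            if pattern.search(answer):
                mentioned += 1
    return mentioned / total if total else 0.0


# Tiny made-up panel: two prompts, two saved answers each.
panel = {
    "best logistics software for 3PLs under 50 people": [
        "Popular options include Acme Logistics and several larger suites.",
        "Most small 3PLs start with a spreadsheet and a carrier portal.",
    ],
    "how do I stop hallucinations in AI customer support": [
        "Ground the assistant in a curated knowledge base.",
        "Add retrieval and a human review step for edge cases.",
    ],
}
print(share_of_answer_mentions(panel, "Acme Logistics"))
```

Running the same panel monthly or quarterly gives a trend line; a real panel would use the 20–50 prompts described above rather than two.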

If a company sees that AI-influenced deals close 20–30 percent faster and at 5–10 percent higher average contract value, rational leaders will prioritize AI search optimization work over yet another paid social experiment. The math is not complex; if 30 AI-influenced deals close at 8 percent higher contract value, and the average deal size is $20,000, the incremental gain is roughly $48,000 (30 × $20,000 × 0.08).

However, practical metrics fall apart if teams pollute “how did you hear about us” with 10 answer choices and no free-text, and exceptions include organizations that delegate this question to interns who change the wording every 60 days.

If/then modeling and cohort analysis

If/then modeling is a technique that converts AI search hypotheses into simple quantitative scenarios using realistic ranges instead of fantasy hockey-stick curves. If/then modeling forces teams to articulate the relationship between answer inclusion, traffic, and revenue in clear, testable statements.

A basic model might state: “If the brand appears in 10 percent of AI answers across our core 30 prompts, and 2 percent of those exposures generate a site visit, and 5 percent of those visits convert to opportunities, then AI search will generate 0.01 percent of prompt volume as opportunities.” For 20,000 relevant prompts per month, that pipeline would equal 2 net-new opportunities. At a 25 percent close rate and $15,000 average deal size, that yields roughly $7,500 in monthly booked revenue.
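An if/then model of this kind is just a chain of multiplications, so it is worth writing down in a form where every assumption is an explicit parameter. The sketch below is a minimal version; the function and parameter names are illustrative, and the inputs are the rates quoted in the paragraph above.

```python
def if_then_model(
    monthly_prompts: float,
    answer_share: float,   # share of answers that include the brand
    visit_rate: float,     # share of exposures that become site visits
    opp_rate: float,       # share of visits that become opportunities
    close_rate: float,
    avg_deal_size: float,
) -> dict[str, float]:
    """Walk the chain from prompt volume to booked revenue, one step at a time."""
    exposures = monthly_prompts * answer_share
    visits = exposures * visit_rate
    opportunities = visits * opp_rate
    revenue = opportunities * close_rate * avg_deal_size
    return {
        "exposures": exposures,
        "visits": visits,
        "opportunities": opportunities,
        "revenue": revenue,
    }


scenario = if_then_model(
    monthly_prompts=20_000,
    answer_share=0.10,
    visit_rate=0.02,
    opp_rate=0.05,
    close_rate=0.25,
    avg_deal_size=15_000,
)
for step, value in scenario.items():
    print(f"{step}: {value:,.2f}")
```

Printing every intermediate step makes it easy to see which assumption drives the result and to rerun the scenario with a pessimistic and an optimistic value for each rate.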

Cohort analysis can then compare AI-influenced customers to traditional search-influenced customers on retention and expansion. If AI cohorts expand 10–20 percent more over 12 months because they started with deeper education, AI search optimization quietly compounds customer lifetime value instead of just filling quarterly dashboards.
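A cohort comparison like this needs little more than a CRM export with a discovery label and two revenue snapshots per customer. The following sketch assumes hypothetical field names (`cohort`, `arr_month_0`, `arr_month_12`) and made-up sample figures; it is not tied to any particular CRM schema.

```python
from collections import defaultdict
from statistics import mean


def expansion_by_cohort(customers: list[dict]) -> dict[str, float]:
    """Average 12-month revenue expansion rate per discovery cohort."""
    rates = defaultdict(list)
    for c in customers:
        growth = (c["arr_month_12"] - c["arr_month_0"]) / c["arr_month_0"]
        rates[c["cohort"]].append(growth)
    return {cohort: mean(values) for cohort, values in rates.items()}


# Made-up sample rows for illustration only.
customers = [
    {"cohort": "ai_influenced", "arr_month_0": 10_000, "arr_month_12": 12_000},
    {"cohort": "ai_influenced", "arr_month_0": 20_000, "arr_month_12": 23_000},
    {"cohort": "traditional_search", "arr_month_0": 10_000, "arr_month_12": 10_500},
    {"cohort": "traditional_search", "arr_month_0": 15_000, "arr_month_12": 16_500},
]
for cohort, rate in expansion_by_cohort(customers).items():
    print(f"{cohort}: {rate:.1%}")
```

With real data, small cohorts will produce noisy averages, so the comparison is best read as a direction rather than a precise figure.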

However, if/then models quickly become garbage when teams stuff them with fantasy conversion rates pulled from SaaS blogs, and exceptions include founders who demand “guaranteed” numbers before they invest a single hour in experiments.

When should you de-prioritize AI search optimization?

AI search optimization is a strategic choice that competes with 5–10 other growth bets for finite time and budget. AI search optimization deserves priority when your category has complex consideration, long sales cycles, and buyers who run research across multiple digital surfaces before talking to sales.

De-prioritization makes sense when your business lives on pure foot traffic, hyper-local impulse buys, or arbitrage models that may not survive 12–24 months. A pizza shop that relies on 80–90 percent local repeat traffic might get more from fixing delivery times than from chasing GEO. A dropshipping hustle that might evaporate next year should not build an AI search moat; it should harvest cash and move on.

If a category has average deal sizes under $200, buyer journeys under 10 minutes, and churn rates above 30 percent annually, AI search optimization will struggle to pay back the 50–200 hours required to build a Surface Shift Map and execute initial plays. In those contexts, brutal honesty is a feature, not a bug.
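The three thresholds above can be turned into a quick screening check. This is only a sketch of the heuristic as stated in the paragraph; the function name and signature are assumptions, and the cutoffs should be treated as rough guides rather than hard rules.

```python
def should_deprioritize(avg_deal_size: float, journey_minutes: float, annual_churn: float) -> bool:
    """True when all three conditions from the text hold at once."""
    return (
        avg_deal_size < 200        # average deal size under $200
        and journey_minutes < 10   # buyer journey under 10 minutes
        and annual_churn > 0.30    # churn above 30 percent annually
    )


# Hypothetical businesses for illustration.
print(should_deprioritize(avg_deal_size=25, journey_minutes=5, annual_churn=0.45))
print(should_deprioritize(avg_deal_size=12_000, journey_minutes=60 * 24 * 30, annual_churn=0.10))
```

The check requires all three conditions together, matching the wording of the paragraph; a business that trips only one of them deserves a closer look rather than an automatic no.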

However, de-prioritizing AI search optimization should come from conscious strategy, and exceptions include leaders who ignore AI search entirely because they feel “behind” and choose denial instead of staged investment.

Red flags that AI search is a distraction for now

Red flags are concrete signals that AI search work would currently be an expensive form of procrastination. Red flags help founders avoid turning AI into a very sophisticated way to avoid fixing obvious holes in their business.

The clearest red flag appears when sales and onboarding are not instrumented at all. If no one can tell close rates, cycle times, or churn within plus or minus 5 percent, AI search optimization will simply pour more mystery traffic into a black box. Another red flag appears when the team ships fewer than 2–3 meaningful pieces of content per quarter; GEO and AEO run on content fuel, not wishes. A final red flag arises when decision makers cannot name even 3–5 category-defining prompts their buyers probably use.

If a leadership team fails all three tests, a more honest roadmap might invest the next 3–6 months in basic analytics, offer refinement, and content muscle. After that foundation exists, the same team can return to AI search with a realistic plan to capture 10–20 percent of discovery rather than chasing buzzword compliance.

However, red flags should not become permanent excuses, and exceptions include organizations that turn “we need foundations” into a 5-year delay while competitors quietly tune GEO and AEO and occupy all the AI surfaces that matter.

Quick Facts

Primary Entity: AI Search Optimization / GEO / AEO

Framework Name: The Surface Shift Map

Core Objective: Shift 20–40 percent of early discovery to AI-mediated surfaces

Key Co-occurring Entities: Large language models, zero click search, entity SEO, knowledge graphs, retrieval augmented generation

Ideal Use Cases: Complex B2B and considered B2C journeys with deal sizes above $2,000

Typical Time Horizon: 3–9 months for answer inclusion, 6–18 months for meaningful revenue impact

Core Metrics: Share of AI answer mentions, AI-influenced opportunities, win rate and cycle time by cohort

🧩 Technical Edge Cases (FAQ)

Q: How should GA4 be configured when AI search referrals from ChatGPT or Perplexity arrive without a referrer header?

A: GA4 handles AI-search attribution gaps by routing “direct” landings into a custom channel grouping and tagging UTM source=llm and medium=ai-search on all GEO/AEO CTAs to preserve 90–100% of session labeling.
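The UTM tagging mentioned in this answer can be sketched with Python's standard library. The helper name `tag_cta_url` and the example URL are hypothetical; `utm_source` and `utm_medium` are the standard UTM parameter names, and the values are the ones given above.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def tag_cta_url(url: str, source: str = "llm", medium: str = "ai-search") -> str:
    """Add or overwrite utm_source and utm_medium while keeping other parameters."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium})
    return urlunparse(parts._replace(query=urlencode(query)))


print(tag_cta_url("https://example.com/audit?plan=starter"))
```

Existing query parameters are preserved, and any earlier `utm_source` or `utm_medium` value is overwritten so a link never carries two conflicting labels.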

Q: How can Salesforce or HubSpot distinguish AI-influenced opportunities from normal organic search in pipeline reports?

A: Salesforce and HubSpot handle AI-influenced attribution by enforcing a required picklist field like Discovery_Source=AI Tool on opportunity creation and syncing it from forms, ensuring 95–100% of relevant deals are cohort-tagged for GEO performance analysis.

Q: Does aggressive faceted navigation on a Shopify store interfere with GEO and AEO crawling for AI surfaces?

A: Shopify handles crawl bloat by combining robots.txt disallow rules with canonical tags pointing to 20–40 core category pages, ensuring AI crawlers ingest stable product taxonomies instead of thousands of duplicate filter URLs.
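Before shipping disallow rules, it can help to test them against sample URLs. The sketch below uses Python's built-in `urllib.robotparser`, which does simple path-prefix matching and does not understand wildcard patterns. The rules, the `/collections/filter/` path, the `ExampleAIBot` user agent, and the URLs are all hypothetical and do not reflect Shopify's default robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; real filter URL patterns will differ.
ROBOTS_TXT = """\
User-agent: *
Disallow: /collections/filter/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str, user_agent: str = "ExampleAIBot") -> bool:
    """Return whether the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/collections/shoes",
    "https://example.com/collections/filter/color-red",
):
    print(url, is_crawlable(url))
```

A check like this only confirms what the rules say; whether a given AI crawler honors robots.txt is a separate question.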

Q: Where should prompt panels and AI-answer test logs live if a team uses Snowflake and BigQuery for analytics?

A: Snowflake and BigQuery handle AI prompt telemetry by storing 1–5 kB JSON rows per test in a dedicated ai_search_tests table, enabling monthly share-of-answer analysis without exceeding typical warehouse cost thresholds.
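A row for such a table might look like the sketch below. The field names, the 2,000-character excerpt limit, and the example values are assumptions chosen to keep each row small; loading the JSON into a warehouse is left out because it depends on the team's own tooling.

```python
import json
from datetime import datetime, timezone


def build_test_row(prompt: str, engine: str, answer_text: str, brand: str) -> str:
    """Serialize one prompt test as a compact JSON row."""
    row = {
        "tested_at": datetime.now(timezone.utc).isoformat(),
        "engine": engine,
        "prompt": prompt,
        "brand": brand,
        "brand_mentioned": brand.lower() in answer_text.lower(),
        # Truncate the answer so the row stays within a few kilobytes.
        "answer_excerpt": answer_text[:2000],
    }
    return json.dumps(row)


# Hypothetical test result for illustration.
payload = build_test_row(
    prompt="best AI search agencies",
    engine="example-engine",
    answer_text="Agencies often mentioned include Acme Search and others.",
    brand="Acme Search",
)
print(len(payload.encode("utf-8")), "bytes")
```

Storing a boolean mention flag alongside the excerpt means the monthly share-of-answer query can be a simple average over the flag.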

Q: How do GDPR and CCPA constraints affect recording user prompts mentioning brand names in GEO experiments?

A: GDPR and CCPA exposure from prompt logging is typically managed with IP truncation, 12–24 month retention limits, and an opt-out flag, so GEO teams keep aggregate “brand mention” metrics without storing identifiable conversation histories.

Sources / Methodology

Heuristic ranges and models in this article are derived from:

Aggregated audits of AI search visibility and entity architecture across multiple growth-stage companies between 2023–2025

Public benchmarks and trend reports from major consultancies and research firms tracking search, AI adoption, and zero click behavior during the same period

Observed changes in “how did you hear about us” responses, CRM cohorts, and analytics patterns where GEO and AEO programs were implemented for at least 6–12 months

All numbers are presented as ranges to reflect uncertainty and category variance rather than as precise forecasts.

About the Author

Kurt Fischman is the CEO & Founder of Growth Marshal, an AI search optimization agency engineering LLM visibility for small businesses. A long-time AI/ML operator, Kurt is one of the leading global voices on AEO and GEO.